Solving Heap Memory Issues in Talend: A Complete Guide

Celestinfo Software Solutions Pvt. Ltd.

Aug 19, 2025

Talend jobs run on top of Java. The heap memory is the portion of RAM allocated to the JVM to store objects created during job execution.

Introduction

Heap memory is the part of a computer’s memory that programs use to allocate memory dynamically during execution. Unlike stack memory, which holds method call frames and local variables, heap memory provides the space for objects whose size and lifespan may not be known at compile time. Managing heap memory carefully matters because improper usage can lead to memory leaks or out-of-memory errors, which cause programs to slow down or crash. Understanding how heap memory works is essential for developers, especially when working with data-intensive applications like those built in Talend.

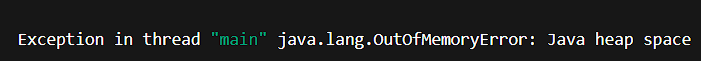

When working with large datasets or complex jobs in Talend, one of the most common errors developers encounter is the dreaded Java heap space error. It often appears as java.lang.OutOfMemoryError: Java heap space.

This error signals that Talend’s underlying Java Virtual Machine (JVM) has run out of memory. Left unresolved, it can cause jobs to fail, slow down, or even crash.

In this blog, let’s break down why heap memory issues occur in Talend, and how you can prevent and fix them effectively.

Common Causes of Heap Memory Issues in Talend

Processing huge files or datasets entirely in memory.

Improper component usage, e.g. a tMap holding millions of rows without filtering.

Joins on large datasets using tMap instead of database-side joins.

Insufficient JVM memory allocation (default heap size too small).

Memory leaks caused by not releasing unused objects.

Best Practices to Fix Heap Memory Issues

1. Increase JVM Memory Allocation

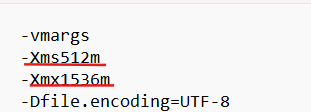

Go to the Talend Studio .ini file in your Talend installation folder.

To permanently increase Talend Studio heap memory, edit the Talend-Studio.ini file (for example, TOS_BD-win-x86_64.ini) in the Talend installation folder. The exact file name varies with the product edition and operating system.

Increase the -Xms (initial heap size) and -Xmx (maximum heap size) values.

In this example (-Xms512m and -Xmx1536m), heap memory starts at 512 MB and can grow up to 1.5 GB. Adjust the values based on your system’s RAM. The -Xms value must not exceed the -Xmx value. For job execution in Talend JobServer or Talend Administration Center (TAC), increase memory in the JVM parameters of the execution task.
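The relationship between the two values can be checked mechanically before editing the file. The sketch below is a hypothetical helper (the class and method names are illustrative and not part of Talend) that converts size strings such as 512m or 2g into bytes and confirms that the initial heap does not exceed the maximum heap.

```java
import java.util.Locale;

/** Illustrative helper, not part of Talend: sanity-checks -Xms/-Xmx size values. */
final class HeapArgs {

    /** Converts a JVM size such as "512m", "2g" or "1048576" into bytes. */
    static long parseSize(String size) {
        String s = size.trim().toLowerCase(Locale.ROOT);
        if (s.isEmpty()) {
            throw new IllegalArgumentException("empty size");
        }
        char unit = s.charAt(s.length() - 1);
        long factor;
        switch (unit) {
            case 'k': factor = 1024L; break;
            case 'm': factor = 1024L * 1024; break;
            case 'g': factor = 1024L * 1024 * 1024; break;
            default:  factor = 1L; break; // no suffix means plain bytes
        }
        String digits = (factor == 1L) ? s : s.substring(0, s.length() - 1);
        return Long.parseLong(digits) * factor;
    }

    /** Returns true when the initial heap size does not exceed the maximum heap size. */
    static boolean isValid(String xms, String xmx) {
        return parseSize(xms) <= parseSize(xmx);
    }

    public static void main(String[] args) {
        System.out.println(isValid("512m", "1536m"));  // true
        System.out.println(isValid("2048m", "1024m")); // false
    }
}
```

The helper takes the size part only (512m, not -Xms512m), which keeps the parsing simple for a quick check.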

2. Increase JVM Memory Allocation (Alternative Method)

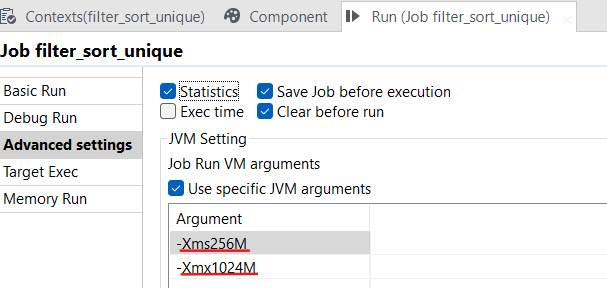

Open Your Job

In Talend Studio, open the job for which you want to increase memory.

Go to the Run View

In the bottom panel, you’ll see tabs such as Run (Job), Component, and Context.

Click the Run tab.

Open the Advanced Settings

Inside the Run tab, click Advanced settings.

Scroll down until you find JVM Settings.

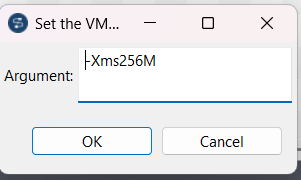

Set JVM Arguments (Heap Size)

In the JVM arguments box, add or modify the memory parameters:

-Xms512m → Initial memory allocation (start heap size).

-Xmx2048m → Maximum heap size (increase this as needed).

Values you can try depending on your system RAM:

Small jobs → -Xms512m -Xmx1024m

Medium jobs → -Xms1024m -Xmx2048m

Large jobs → -Xms2048m -Xmx4096m

This method increases memory only for this particular job’s run inside Studio.
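To confirm that the new arguments actually reached the JVM, it can help to print the heap limits at the start of a run. The following is a minimal sketch using the standard java.lang.Runtime API. The class name is hypothetical, and placing the body of describe() in a tJava component is only one possible way to use it. The reported maximum can be slightly lower than the -Xmx value, depending on the garbage collector.

```java
/** Illustrative check (hypothetical class name): reports the heap limits of the running JVM. */
final class HeapReport {

    /** Converts a byte count into whole megabytes. */
    static long toMegabytes(long bytes) {
        return bytes / (1024L * 1024L);
    }

    /** Builds a one-line summary of maximum, committed and free heap. */
    static String describe() {
        Runtime rt = Runtime.getRuntime();
        return "max=" + toMegabytes(rt.maxMemory()) + " MB, "
             + "committed=" + toMegabytes(rt.totalMemory()) + " MB, "
             + "free=" + toMegabytes(rt.freeMemory()) + " MB";
    }

    public static void main(String[] args) {
        // With -Xmx2048m the "max" figure should be close to 2048 MB.
        System.out.println(describe());
    }
}
```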

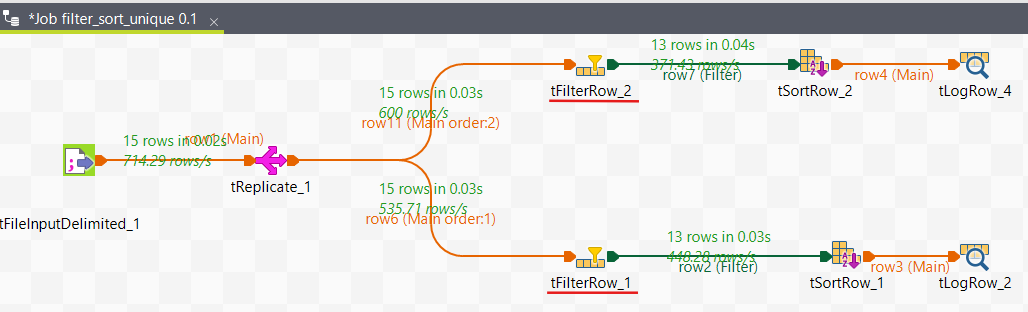

3. Optimize Job Design

Filter early: Use tFilterRow or conditions in the input components (for example, a WHERE clause in a database input query) to reduce data volume.

Push down transformations: Perform heavy joins/aggregations directly in the database using tELT components.

Use streaming: Instead of loading everything into memory, process records row by row.

In the image below, a tFilterRow component reduces the data volume early in the job.

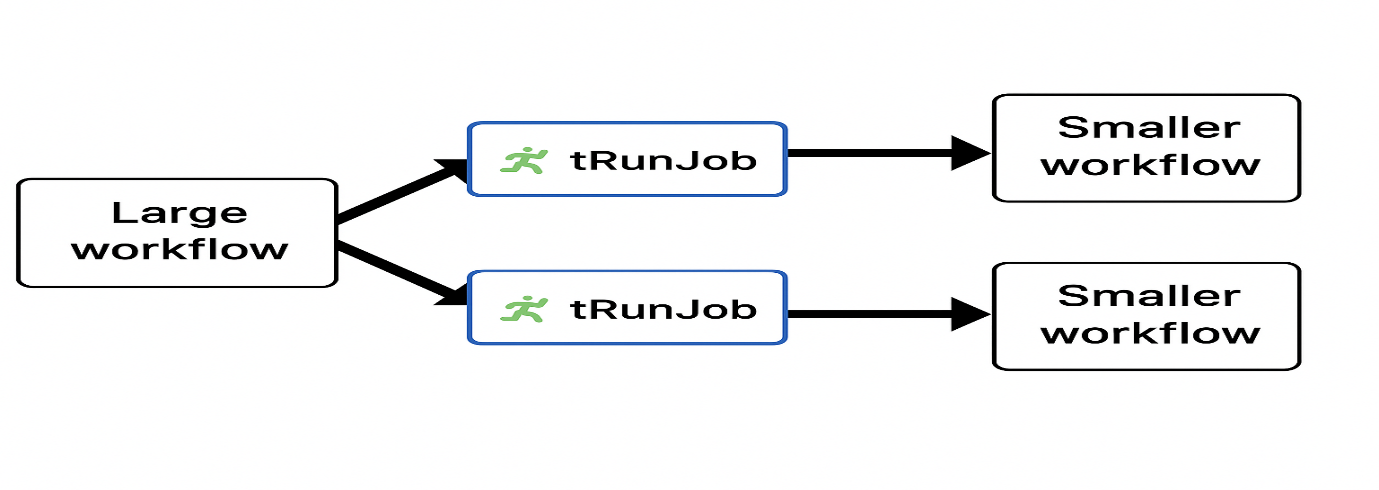
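The difference between loading everything and streaming can be illustrated in plain Java. This is a sketch of the general idea only, not the code Talend generates: the file layout (id, amount, status), the class name, and the naive comma split are assumptions made for the example. Files.lines reads the file lazily, so only the current row is held while the filter is applied.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.stream.Stream;

/** Illustrative sketch: filter rows while streaming instead of loading the whole file. */
final class StreamingFilter {

    /** Counts rows whose third column equals the wanted status; the first line is a header. */
    static long countMatching(Stream<String> lines, String status) {
        return lines
                .skip(1)                               // skip the header row
                .map(line -> line.split(",", -1))      // naive CSV split, no quoted fields
                .filter(cols -> cols.length > 2 && status.equals(cols[2]))
                .count();
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample data written to a temporary file for the demo.
        Path csv = Files.createTempFile("orders", ".csv");
        Files.write(csv, Arrays.asList(
                "id,amount,status", "1,10,OPEN", "2,20,CLOSED", "3,30,OPEN"));

        // Files.lines is lazy: rows are read one at a time as the stream is consumed.
        try (Stream<String> lines = Files.lines(csv)) {
            System.out.println(countMatching(lines, "OPEN")); // 2
        }
        Files.delete(csv);
    }
}
```

Replacing Files.lines with Files.readAllLines would give the same count but would hold every row in memory at once, which is the pattern to avoid with large inputs.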

4. Break Down Large Jobs

Split large workflows into smaller subjobs using tRunJob.

Write intermediate data to temporary files or staging tables.

This reduces the memory load on any single job.

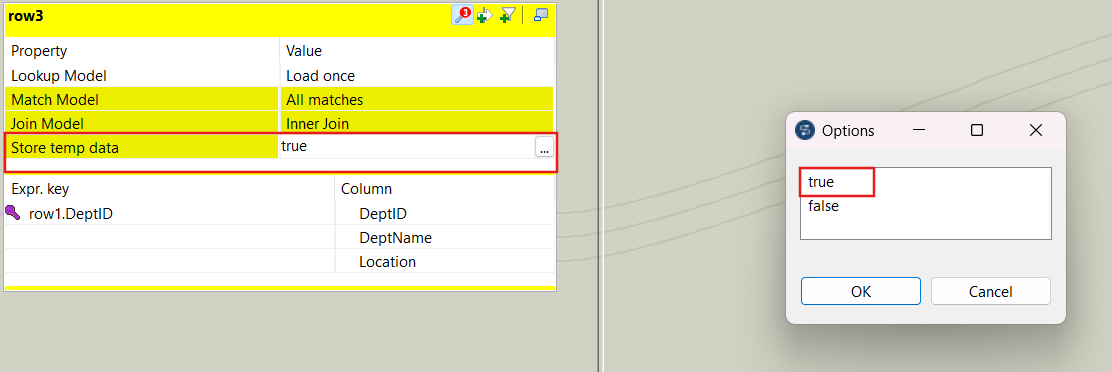

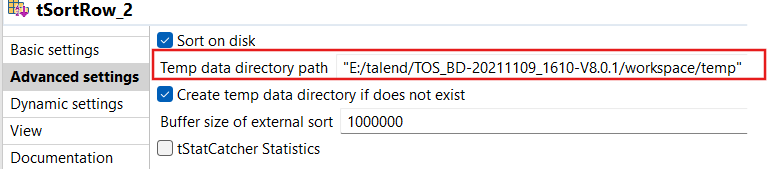
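The staging idea can be sketched outside Talend as two independent steps that communicate only through a file. The class, method names, and row layout below are hypothetical. The point is that the first step releases its memory once the staging file is written, and the second step streams that file instead of receiving an in-memory dataset.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Iterator;
import java.util.stream.Stream;

/** Illustrative sketch: two steps that hand data over through a staging file. */
final class StagedPipeline {

    /** Step 1: keep only rows ending in ",OPEN" and write them to a temporary staging file. */
    static Path extractToStaging(Stream<String> rows) {
        try {
            Path staging = Files.createTempFile("staging", ".csv");
            try (BufferedWriter out = Files.newBufferedWriter(staging)) {
                Iterator<String> it = rows.iterator();
                while (it.hasNext()) {
                    String row = it.next();
                    if (row.endsWith(",OPEN")) {
                        out.write(row);
                        out.newLine();
                    }
                }
            }
            return staging;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Step 2: stream the staging file and total the second column. */
    static long sumAmounts(Path staging) {
        try (Stream<String> lines = Files.lines(staging)) {
            return lines.mapToLong(line -> Long.parseLong(line.split(",")[1])).sum();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path staging = extractToStaging(Stream.of("1,10,OPEN", "2,20,CLOSED", "3,30,OPEN"));
        System.out.println(sumAmounts(staging)); // 40
        Files.delete(staging);
    }
}
```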

5. Use the Right Component Settings

In tMap, enable the “Store temp data” option for large lookups (saves data on disk instead of RAM).

Use tSortRow with external sorting instead of in-memory sorting.
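For readers curious why sorting on disk needs less heap, here is a minimal sketch of a classic external merge sort. It is not Talend's implementation, and all names are illustrative. Rows are sorted in small chunks, each chunk is spilled to a temporary file, and the chunks are then merged while keeping only one row per chunk in memory.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.PriorityQueue;
import java.util.function.Consumer;

/** Illustrative external merge sort: bounded memory, temporary files on disk. */
final class ExternalSort {

    /** One sorted chunk file plus the line currently at its head. */
    private static final class Run implements Comparable<Run> {
        final BufferedReader reader;
        String head;

        Run(Path file) throws IOException {
            reader = Files.newBufferedReader(file);
            head = reader.readLine();
        }

        /** Moves to the next line; returns false when the chunk is exhausted. */
        boolean advance() throws IOException {
            head = reader.readLine();
            return head != null;
        }

        @Override
        public int compareTo(Run other) {
            return head.compareTo(other.head);
        }
    }

    /** Sorts rows, buffering at most chunkSize rows at a time, and emits them to sink in order. */
    static void sort(Iterator<String> rows, int chunkSize, Consumer<String> sink) {
        try {
            // Phase 1: sort fixed-size chunks in memory and spill each one to a temp file.
            List<Path> chunkFiles = new ArrayList<>();
            List<String> buffer = new ArrayList<>(chunkSize);
            while (rows.hasNext()) {
                buffer.add(rows.next());
                if (buffer.size() == chunkSize || !rows.hasNext()) {
                    Collections.sort(buffer);
                    Path chunk = Files.createTempFile("sort-chunk", ".txt");
                    Files.write(chunk, buffer);
                    chunkFiles.add(chunk);
                    buffer.clear();
                }
            }

            // Phase 2: k-way merge, holding only the head row of each chunk.
            PriorityQueue<Run> heads = new PriorityQueue<>();
            for (Path chunk : chunkFiles) {
                Run run = new Run(chunk);
                if (run.head != null) heads.add(run); else run.reader.close();
            }
            while (!heads.isEmpty()) {
                Run smallest = heads.poll();
                sink.accept(smallest.head);
                if (smallest.advance()) heads.add(smallest); else smallest.reader.close();
            }
            for (Path chunk : chunkFiles) {
                Files.delete(chunk);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        List<String> rows = List.of("pear", "apple", "fig", "kiwi", "banana");
        sort(rows.iterator(), 2, System.out::println);
    }
}
```

The trade-off is extra disk I/O in exchange for a heap footprint that depends on the chunk size rather than on the total number of rows.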

Real-World Example

A Talend job was failing with a heap memory error while processing a 2 GB CSV file. After investigation:

The default heap (512 MB initial, 1536 MB maximum) was too small.

The job used tMap to join two full datasets in memory.

Fix:

1. Increased the maximum heap size from -Xmx1536m to -Xmx4096m.

2. Added filtering in the input stage to reduce row volume.

Result:

The job completed successfully within a few minutes, without heap memory issues.

Conclusion

Heap memory errors in Talend are common, but they’re not unfixable. By combining the right configuration (increasing JVM memory) with job design best practices (filtering, modularization, database pushdown), you can build ETL jobs that are both efficient and stable. When working with large datasets, it’s important to design your jobs to use memory efficiently.